Who are we?

2021-10-05

Greetings

Relation to R

What’s in store?

Attention to visualization principles while digging into the R universe

Also… possibly “great frustration and much suckiness…” - Hadley Wickham

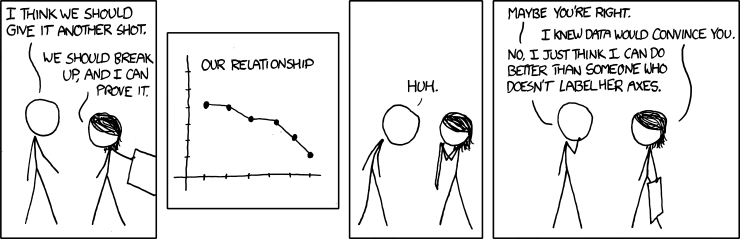

Data Viz: Truthful Art?

Cairo (from the introduction): A good visualization is

- reliable information,

- visually encoded so relevant patterns become noticeable,

- organized in a way that enables at least some exploration, when it’s appropriate,

- and presented in an attractive manner, but always remembering that honesty, clarity, and depth come first.

Wilke (from Chapter 1): Data visualization is part art and part science. [Avoid being…]

- ugly (aesthetics)

- bad (perception)

- wrong (accuracy)

Data visualization is essential for data exploration, communication, and understanding. Imagine we have a small dataset with the following summary characteristics:

A set of summary statistics is at best a partial picture until we see what the data look like.

## # A tibble: 1 × 6
##   dataset mean_x mean_y std_dev_x std_dev_y corr_x_y
##     <dbl>  <dbl>  <dbl>     <dbl>     <dbl>    <dbl>
## 1       1   54.3   47.8      16.8      26.9  -0.0641

Imagine another dataset with the following summary characteristics… and scatterplot…

## # A tibble: 1 × 6
##   dataset mean_x mean_y std_dev_x std_dev_y corr_x_y
##     <dbl>  <dbl>  <dbl>     <dbl>     <dbl>    <dbl>
## 1       2   54.3   47.8      16.8      26.9  -0.0686

But wait! There’s more!

## # A tibble: 1 × 6
##   dataset mean_x mean_y std_dev_x std_dev_y corr_x_y
##     <dbl>  <dbl>  <dbl>     <dbl>     <dbl>    <dbl>
## 1       3   54.3   47.8      16.8      26.9  -0.0645
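The datasets above are not reproduced here, but base R ships with a classic example of the same phenomenon: Anscombe's quartet, the built-in anscombe data frame. A minimal sketch, swapping in anscombe for the course's datasets, computes the same kind of summary table and then draws the four scatterplots:

```r
# Anscombe's quartet is built into base R: columns x1..x4 pair with y1..y4
quartet_stats <- do.call(rbind, lapply(1:4, function(i) {
  x <- anscombe[[paste0("x", i)]]
  y <- anscombe[[paste0("y", i)]]
  data.frame(dataset = i,
             mean_x = mean(x), mean_y = mean(y),
             std_dev_x = sd(x), std_dev_y = sd(y),
             corr_x_y = cor(x, y))
}))
print(round(quartet_stats, 2))

# Nearly identical summaries, but four very different scatterplots
old_par <- par(mfrow = c(2, 2))
for (i in 1:4) {
  plot(anscombe[[paste0("x", i)]], anscombe[[paste0("y", i)]],
       main = paste("dataset", i), xlab = "x", ylab = "y")
}
par(old_par)
```

Every row of the summary table shows the same means, standard deviations, and correlation (to about two decimals); only the plots reveal the differences.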

R, RStudio

R is the computational engine; RStudio is the interface

Organizing R

For any new project in R, create an R Project. Projects let RStudio leave notes for itself (e.g., history), always start a new R session when opened, and always set the working directory to the project directory. If you never have to set the working directory at the top of a script, that’s a good thing![^2]

And create a system for organizing the objects in this project!

R Packages

Functions are the “verbs” that allow us to manipulate data. Packages contain functions, and all functions belong to packages.

R comes with about 30 packages (“base R”). There are over 10,000 user-contributed packages; you can discover these packages online on the Comprehensive R Archive Network (CRAN), with more in active development on GitHub.

To use a package, install it (you only need to do this once):

- You can install packages via point-and-click: Tools > Install Packages…, enter tidyverse (or a different package name), then click Install.

- Or you can use this command in the console: install.packages("tidyverse")

In each new R session, you’ll have to load the package if you want access to its functions, e.g., by typing library(tidyverse).

Practice

Let’s start working in R!

- Go to the class website and download the zip file. Put it anywhere and unzip it.

- Go to the unzipped folder and double-click the week1_materials.Rproj file to launch a new R session in RStudio.

dplyr

Part of the tidyverse, dplyr is a package for data manipulation. It implements a grammar for transforming data, based on verbs (functions) that each perform a common task.

Functions/Verbs

dplyr functions work on data frames.

- The first argument of dplyr functions is always a data frame.

- It is followed by function-specific arguments that detail what to do.
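Because every verb takes a data frame first and returns a data frame, the result of one verb can be handed straight to the next. A minimal sketch on a tiny hypothetical homes tibble (made-up values standing in for the course's property data):

```r
library(dplyr)

# Hypothetical toy data, for illustration only
homes <- tibble(
  id        = 1:4,
  yearbuilt = c(1998, 2020, 2020, 2005),
  finsqft   = c(1800, 2400, 1500, 3100)
)

# The data frame comes first; the remaining arguments say what to do
recent <- filter(homes, yearbuilt == 2020)

# The result is again a data frame (a tibble), so it can feed the next verb
class(recent)
select(recent, id, finsqft)
```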

Isolating data

\(\color{blue}{\text{select()}}\) - extract \(\color{blue}{\text{variables}}\)

\(\color{green}{\text{filter()}}\) - extract \(\color{green}{\text{rows}}\)

\(\color{green}{\text{arrange()}}\) - reorder \(\color{green}{\text{rows}}\)

select()

Extract columns by name.

select(property, yearbuilt)

select() helpers include

- select(.data, var1:var10): select range of columns

- select(.data, -c(var1, var2)): select every column except var1 and var2

- select(.data, starts_with("string")): select columns whose names start with… (or ends_with("string"))

- select(.data, contains("string")): select columns whose names contain…
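A short sketch of each helper, using a hypothetical parcels tibble whose column names were chosen only to exercise the helpers:

```r
library(dplyr)

# Hypothetical toy data
parcels <- tibble(
  parcel_id    = 1:2,
  year_built   = c(1990, 2020),
  year_remodel = c(2010, NA),
  fin_sqft     = c(1800, 2400),
  total_value  = c(250000, 410000)
)

select(parcels, parcel_id:year_remodel)         # range of columns
select(parcels, -c(year_built, year_remodel))   # everything except these two
select(parcels, starts_with("year"))            # names starting with "year"
select(parcels, ends_with("value"))             # names ending with "value"
select(parcels, contains("sqft"))               # names containing "sqft"
```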

filter()

Extract rows that meet logical conditions.

filter(property, cardtype == "R" & yearbuilt > 2020)

| Logical tests | Boolean operators for multiple conditions |
|---|---|
| x < y: less than | a & b: and |
| x >= y: greater than or equal to | a \| b: or |
| x == y: equal to | xor(a, b): exclusive or (exactly one is TRUE) |
| x != y: not equal to | !a: not |
| x %in% y: is a member of | |
| is.na(x): is NA | |
| !is.na(x): is not NA | |
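A sketch combining several of these tests on a hypothetical homes tibble. Note that filter() keeps only rows where the condition is TRUE, so rows where it evaluates to NA (e.g., NA > 2020) are dropped:

```r
library(dplyr)

# Hypothetical toy data (not the course's property data)
homes <- tibble(
  cardtype  = c("R", "R", "C", "R", "R"),
  yearbuilt = c(2021, 2019, 2021, NA, 2022),
  condition = c("Good", "Fair", "Average", "Good", NA)
)

# Comma-separated conditions are combined with &
filter(homes, cardtype == "R", yearbuilt > 2020)

# %in% tests membership in a set of values
filter(homes, condition %in% c("Good", "Average"))

# Keep rows where yearbuilt is missing OR the condition is "Fair"
filter(homes, is.na(yearbuilt) | condition == "Fair")
```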

arrange()

Order rows from smallest to largest values (or vice versa) for designated column/s.

arrange(property, yearbuilt)

Reverse the order (largest to smallest) with desc()

arrange(property, desc(yearbuilt))
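arrange() also accepts several columns: later columns break ties in earlier ones. A sketch on hypothetical data; missing values are sorted to the end, even with desc():

```r
library(dplyr)

# Hypothetical toy data
homes <- tibble(
  esdistrict = c("B", "A", "B", "A"),
  yearbuilt  = c(2010, 2020, NA, 2015)
)

# Sort by district first, then newest-first within each district
sorted <- arrange(homes, esdistrict, desc(yearbuilt))
sorted
```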

and more…

\(\color{green}{\text{slice()}}\) - extract \(\color{green}{\text{rows}}\) using index(es)

\(\color{green}{\text{distinct()}}\) - filter for unique \(\color{green}{\text{rows}}\)

\(\color{green}{\text{sample_n()/sample_frac()}}\) - randomly sample \(\color{green}{\text{rows}}\)
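A sketch of these row helpers on a hypothetical tibble with one duplicated row. In dplyr 1.0.0 and later, sample_n() and sample_frac() are superseded by slice_sample(), shown alongside:

```r
library(dplyr)

# Hypothetical toy data; rows 2 and 3 are identical
homes <- tibble(
  id        = c(1, 2, 2, 3, 4),
  yearbuilt = c(1990, 2005, 2005, 2020, 2021)
)

slice(homes, 1:2)              # rows by position
distinct(homes)                # drop exact duplicate rows
distinct(homes, yearbuilt)     # unique values of one column

set.seed(42)                   # make the random draws reproducible
sample_n(homes, 2)             # 2 random rows
sample_frac(homes, 0.4)        # 40% of the rows, at random
slice_sample(homes, n = 2)     # newer equivalent of sample_n()
```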

pipes

The pipe (%>%) allows you to chain together functions by passing (piping) the result on the left into the first argument of the function on the right.

To get the totalvalue and finsqft for properties built in 2020, arranged in descending order of totalvalue, without the pipe we could nest the functions:

arrange(
  select(
    filter(property, yearbuilt == "2020" & cardtype == "R"),
    totalvalue, finsqft),
  desc(totalvalue))

Or run each and save the intervening steps

tmp <- filter(property, yearbuilt == "2020" & cardtype == "R")
tmp <- select(tmp, totalvalue, finsqft)
arrange(tmp, desc(totalvalue))

With the pipe, we call each function in sequence (read the pipe as “and then…”)

property %>%
  filter(yearbuilt == "2020" & cardtype == "R") %>%
  select(totalvalue, finsqft) %>%
  arrange(desc(totalvalue))
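To check that the nested and piped versions really do the same thing, here is a sketch on a small hypothetical stand-in for property (made-up values):

```r
library(dplyr)

# Hypothetical toy data
property_demo <- tibble(
  cardtype   = c("R", "R", "C", "R"),
  yearbuilt  = c(2020, 2020, 2020, 2015),
  totalvalue = c(350000, 520000, 900000, 300000),
  finsqft    = c(1600, 2500, 5000, 1400)
)

# Nested: read from the inside out
nested <- arrange(
  select(
    filter(property_demo, yearbuilt == 2020 & cardtype == "R"),
    totalvalue, finsqft),
  desc(totalvalue))

# Piped: read top to bottom, "and then..."
piped <- property_demo %>%
  filter(yearbuilt == 2020 & cardtype == "R") %>%
  select(totalvalue, finsqft) %>%
  arrange(desc(totalvalue))

identical(nested, piped)   # same steps, same result
```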

Keyboard Shortcut!

- Mac: cmd + shift + m

- Windows: ctrl + shift + m

Deriving data

\(\color{blue}{\text{summarize()}}\) - summarize \(\color{blue}{\text{variables}}\)

\(\color{green}{\text{group_by()}}\) - group \(\color{green}{\text{rows}}\)

\(\color{blue}{\text{mutate()}}\) - create new \(\color{blue}{\text{variables}}\)

summarize()

Compute summaries (useful summary functions are listed in the table below).

property %>%
  filter(yearbuilt == "2020" & cardtype == "R") %>%
  summarize(smallest = min(finsqft),
            biggest = max(finsqft),
            total = n())

- multiple summary functions can be called within the same summarize();

- we can give the summary values new names (though we don’t have to);

| Summary functions | |
|---|---|
| first(): first value | last(): last value |
| min(): minimum value | max(): maximum value |
| mean(): mean value | median(): median value |
| var(): variance | sd(): standard deviation |
| nth(.x, n): nth value | quantile(.x, probs = .25): 25th percentile |
| n_distinct(): number of distinct values | n(): number of rows |
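A sketch using several functions from the table on hypothetical data. mean(), median(), and similar functions return NA if any input is NA (and quantile() stops with an error), so na.rm = TRUE is passed to drop missing values first:

```r
library(dplyr)

# Hypothetical toy data with one missing finsqft
homes <- tibble(
  finsqft    = c(1200, 1600, 2000, 2400, NA),
  esdistrict = c("A", "A", "B", "B", "B")
)

summary_tbl <- homes %>%
  summarize(
    n_homes     = n(),                                    # rows, including NA
    n_districts = n_distinct(esdistrict),
    avg_sqft    = mean(finsqft, na.rm = TRUE),
    med_sqft    = median(finsqft, na.rm = TRUE),
    q25_sqft    = quantile(finsqft, probs = 0.25, na.rm = TRUE),
    first_sqft  = first(finsqft)
  )
summary_tbl
```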

group_by()

Groups cases by common values of one or more columns.

property %>%
  filter(yearbuilt == "2020" & cardtype == "R") %>%
  group_by(esdistrict) %>%
  summarize(smallest = min(finsqft),
            biggest = max(finsqft),
            avg_value = mean(totalvalue, na.rm = TRUE),
            number = n()) %>%
  arrange(desc(avg_value))
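With group_by(), summarize() returns one row per group. A sketch of the same pipeline on hypothetical data:

```r
library(dplyr)

# Hypothetical toy data with one missing totalvalue
homes <- tibble(
  esdistrict = c("A", "A", "B", "B", "B"),
  finsqft    = c(1200, 1600, 2000, 2400, 2800),
  totalvalue = c(200000, 260000, 330000, NA, 450000)
)

by_district <- homes %>%
  group_by(esdistrict) %>%
  summarize(smallest  = min(finsqft),
            biggest   = max(finsqft),
            avg_value = mean(totalvalue, na.rm = TRUE),
            number    = n()) %>%
  arrange(desc(avg_value))

by_district   # one row per esdistrict
```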

mutate()

Create new columns or alter existing columns

property %>%
  filter(yearbuilt == "2020" & cardtype == "R") %>%
  mutate(finsqft = as.numeric(finsqft),
         value_sqft = totalvalue/finsqft) %>%
  group_by(esdistrict) %>%
  summarize(smallest = min(finsqft),
            biggest = max(finsqft),
            avg_value = mean(totalvalue, na.rm = TRUE),
            number = n()) %>%
  arrange(desc(avg_value))

- mutate new variables as functions of other variables (ratios, conditions, ranks, etc.).

- mutate multiple variables in the same command.

- mutate based on conditions: if_else(), case_when()
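A sketch of a ratio, two conditional variables, and a rank created in one mutate() call, on hypothetical data. case_when() checks its conditions in order and uses the first one that is TRUE; the final TRUE ~ catches everything else:

```r
library(dplyr)

# Hypothetical toy data
homes <- tibble(
  yearbuilt  = c(1925, 1978, 2004, 2021),
  finsqft    = c(900, 1800, 2600, 4200),
  totalvalue = c(150000, 270000, 390000, 800000)
)

out <- homes %>%
  mutate(
    value_sqft = totalvalue / finsqft,                    # ratio
    new_build  = if_else(yearbuilt >= 2020, "yes", "no"), # two outcomes
    size_class = case_when(                               # several outcomes
      finsqft < 1000 ~ "small",
      finsqft < 3000 ~ "medium",
      TRUE           ~ "large"
    ),
    value_rank = min_rank(desc(totalvalue))               # 1 = most valuable
  )
out
```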

and more…

\(\color{blue}{\text{tally()}}\) - shorthand for summarize(n = n())

\(\color{blue}{\text{count()}}\) - shorthand for group_by() + tally()

\(\color{blue}{\text{summarize(across())}}\) - apply a summary function to selected \(\color{blue}{\text{variables}}\)

\(\color{blue}{\text{mutate(across())}}\) - apply a function to selected \(\color{blue}{\text{variables}}\) within mutate()

\(\color{blue}{\text{summarize(across(where()))}}\) - apply a summary function to \(\color{blue}{\text{variables}}\) that meet a condition (e.g., is.numeric)

\(\color{blue}{\text{rename()}}\) - rename \(\color{blue}{\text{variables}}\)

\(\color{blue}{\text{recode()}}\) - modify values of \(\color{blue}{\text{variables}}\)
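A compact sketch of these helpers on hypothetical data; the cardtype codes and labels below are made up for illustration:

```r
library(dplyr)

# Hypothetical toy data
homes <- tibble(
  esdistrict = c("A", "A", "B", "B", "B"),
  finsqft    = c(1200, 1600, 2000, 2400, 2800),
  totalvalue = c(200000, 260000, 330000, 400000, 450000),
  cardtype   = c("R", "R", "R", "C", "R")
)

homes %>% tally()                  # one row, column n
homes %>% count(esdistrict)        # one row per district, column n

homes %>% summarize(across(c(finsqft, totalvalue), mean))   # selected columns
homes %>% summarize(across(where(is.numeric), max))         # by condition
homes %>% mutate(across(c(finsqft, totalvalue), log))       # transform in place

homes %>%
  rename(district = esdistrict) %>%                         # new_name = old_name
  mutate(cardtype = recode(cardtype, R = "Residential", C = "Commercial"))
```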

factors

Factors are variables which take on a limited number of values, aka categorical variables. In R, factors are stored as a vector of integer values with the corresponding set of character values you’ll see when displayed (colloquially, labels; in R, levels).

property %>% count(condition) # currently a character

property %>%
  mutate(condition = factor(condition)) %>% # make a factor
  count(condition)

# specify the ordering of the factor levels
cond_levels <- c("Excellent", "Good", "Average", "Fair", "Poor", "Very Poor", "Unknown")
property %>%
  mutate(condition = factor(condition, levels = cond_levels)) %>%
  count(condition)
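To see the integer codes behind a factor, here is a base R sketch on a hypothetical vector of condition ratings. By default factor() sorts the levels alphabetically; supplying levels fixes the order, keeps unused levels, and turns any value not listed into NA:

```r
# Hypothetical condition ratings stored as character
condition <- c("Good", "Fair", "Excellent", "Good", "Unknown")

# Default: levels sorted alphabetically
f_default <- factor(condition)
levels(f_default)      # "Excellent" "Fair" "Good" "Unknown"
as.integer(f_default)  # 3 2 1 3 4

# Explicit levels set the order and keep unused levels such as "Poor"
cond_levels <- c("Excellent", "Good", "Average", "Fair", "Poor", "Very Poor", "Unknown")
f_ordered <- factor(condition, levels = cond_levels)
as.integer(f_ordered)  # 2 4 1 2 7
table(f_ordered)       # counts in cond_levels order, including zeros

# A value missing from `levels` silently becomes NA
factor(c("Good", "Very Good"), levels = cond_levels)
```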

forcats

The forcats package, part of the tidyverse, provides helper functions for working with factors, including:

- fct_infreq(): reorder factor levels by frequency of levels

- fct_reorder(): reorder factor levels by another variable

- fct_relevel(): change order of factor levels by hand

- fct_recode(): change factor levels by hand

- fct_collapse(): collapse factor levels into defined groups

- fct_lump(): collapse least/most frequent levels of factor into “other”
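A sketch of these helpers on a hypothetical factor. Recent forcats versions (0.5.0 and later) also offer the more specific fct_lump_n(), fct_lump_prop(), and fct_lump_min(); fct_lump_n() is used here:

```r
library(forcats)

# Hypothetical condition ratings: Good x4, Average x2, the rest x1
condition <- factor(c("Good", "Good", "Average", "Good", "Fair",
                      "Average", "Poor", "Excellent", "Good"))

levels(fct_infreq(condition))                       # most frequent first
levels(fct_relevel(condition, "Excellent", "Good")) # move these to the front

fct_recode(condition, "Below average" = "Fair")     # new_name = "old_name"
fct_collapse(condition,
             high = c("Excellent", "Good"),
             low  = c("Average", "Fair", "Poor"))
fct_lump_n(condition, n = 2)    # keep the 2 most common, the rest -> "Other"
```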

ggplot2: Mapping Aesthetics

The Grammar of Graphics: all data visualizations map data to aesthetic attributes (location, shape, color) of geometric objects (lines, points, bars).

- Quantitative (continuous, discrete, time) mapped to position, size/line width, color, transparency, …

- Qualitative (ordered, unordered, text) mapped to position, shape/line type, color

Scales control the mapping from data to aesthetics and provide tools to read the plot (axes, legends). Geometric objects are drawn in a specific coordinate system.

A plot can contain statistical transformations of the data (counts, means, medians), and faceting can be used to generate the same plot for different subsets of the data.

Hadley Wickham, ggplot2: Elegant Graphics for Data Analysis
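A sketch of a statistical transformation and faceting together, using the built-in mtcars data purely as stand-in data: geom_bar() counts rows per category (its default stat), and facet_wrap() repeats the plot for each value of am (transmission type, 0/1):

```r
library(ggplot2)

# mtcars ships with base R; used here only as stand-in data
p <- ggplot(mtcars, aes(x = factor(cyl))) +
  geom_bar() +              # bar height = number of rows (a count stat)
  facet_wrap(~ am) +        # one panel per transmission type
  labs(x = "cylinders", y = "count")
print(p)
```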

Strategies

ggplot(data, aes(x = var1, y = var2)) +
  geom_point(aes(color = var3)) +
  geom_smooth(color = "red") +
  labs(title = "Helpful Title",
       x = "x-axis label")
# geom_histogram(), geom_boxplot(), geom_bar(), etc.

- Building layers (data, geoms, scales, labels, etc.)

- Mapping versus setting aesthetics

- Global versus geom-specific aesthetics
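A concrete version of the template above, again using mtcars as stand-in data. The x/y mapping in ggplot() is global and inherited by both layers; color is mapped inside aes() only in geom_point(), so the smoother ignores it, while color = "red" in geom_smooth() is set to a single fixed value:

```r
library(ggplot2)

p <- ggplot(mtcars, aes(x = wt, y = mpg)) +        # global mapping
  geom_point(aes(color = factor(cyl))) +           # mapped: varies with data
  geom_smooth(method = "lm", color = "red") +      # set: one fixed color
  labs(title = "Fuel economy vs. weight",
       x = "weight (1000 lbs)", y = "miles per gallon",
       color = "cylinders")
print(p)
```

A common slip is writing aes(color = "red"): that maps the constant string "red" as if it were data, producing a legend entry and a default palette color instead of red.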

Wilke example

Daily temperatures

head(ncdn_long)

## # A tibble: 6 × 10
##   station     date  name  month day   avg_tmp max_tmp min_tmp location dates
##   <chr>       <chr> <chr> <chr> <chr>   <dbl>   <dbl>   <dbl> <fct>    <date>
## 1 USW00003759 01-01 CHAR… 01    01       36      43.5    28.5 Charlot… 0000-01-01
## 2 USW00003759 01-02 CHAR… 01    02       35.9    43.4    28.4 Charlot… 0000-01-02
## 3 USW00003759 01-03 CHAR… 01    03       35.8    43.3    28.3 Charlot… 0000-01-03
## 4 USW00003759 01-04 CHAR… 01    04       35.7    43.2    28.2 Charlot… 0000-01-04
## 5 USW00003759 01-05 CHAR… 01    05       35.6    43.1    28.1 Charlot… 0000-01-05
## 6 USW00003759 01-06 CHAR… 01    06       35.5    43      28   Charlot… 0000-01-06

ggplot(ncdn_long, aes(x = dates, y = avg_tmp, color = location)) +
  geom_line(size = 1) +
  scale_x_date(name = "month", date_labels = "%b") +
  scale_y_continuous(limits = c(15, 95),
                     breaks = seq(15, 95, by = 20),
                     name = "temperature (°F)") +
  labs(title = "Average Daily Normal Temperatures")

Monthly average temperatures

head(mean_ncdn)

## # A tibble: 6 × 3
##   location month  mean
##   <fct>    <fct> <dbl>
## 1 Houston  Jan    53.8
## 2 Houston  Feb    57.8
## 3 Houston  Mar    63.8
## 4 Houston  Apr    69.9
## 5 Houston  May    77.3
## 6 Houston  Jun    83.0

ggplot(mean_ncdn, aes(x = month, y = location, fill = mean)) +
  geom_tile(width = .95, height = 0.95) +
  scale_fill_viridis_c(option = "B", begin = 0.15, end = 0.98,
                       name = "temp (°F)") +
  scale_y_discrete(name = NULL) +
  coord_fixed(expand = FALSE) +
  theme(axis.line = element_blank(),
        axis.ticks = element_blank()) +
  labs(title = "Average Monthly Normal Temperatures")

One more example

All of the code for Wilke's book is on GitHub: https://github.com/clauswilke/dataviz